Bag of Words Models

What Are Bag of Words Models?

In all of my tutorials thus far, we’ve worked with algorithms trained on datasets containing mostly numeric values; moreover, the only textual inputs have been categorical and could thus be encoded using one-hot encoding.

In practical applications, however, many datasets contain non-categorical textual inputs. These pose a problem because algorithms trained with optimization methods such as gradient descent operate on numbers and cannot process raw text. Thus, we must use natural language processing methods to convert textual input into numbers.

Though this may initially seem like a daunting task, it can be accomplished with bag of words models, which represent each text as an array of word counts. These arrays are sparse: most of their integer values are 0.

How Do Bag of Words Models Work?

Text Preprocessing

To understand bag of words models, let’s first take an instance of a sample input text.

The dog refused to share the bone with the other dogs.

To make the bag of words model work well, we usually remove stopwords from the input text. Stopwords are words that don’t provide much additional meaning to a sentence. Usually, natural language processing libraries provide us with a list of stopwords so that we can iterate over the dataset and remove them.

In addition to removing the stopwords, we must turn each letter into lowercase and remove any extraneous symbols, numbers, or punctuation. Finally, we must replace each word with its stem. For example:

ate —> eat

gnawed —> gnaw

loved —> love

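Stemming lets different forms of the same word, such as dog and dogs, be counted together, which will matter once we start counting words. (Strictly speaking, mapping an irregular form like ate to eat is lemmatization rather than stemming, since a rule-based stemmer typically only strips suffixes.) After completing all our processing, our input text might look something like this: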

dog refuse share bone other dog
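The preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `STOPWORDS` set and the `STEMS` lookup table are tiny, hypothetical stand-ins for the stopword list and stemmer that a natural language processing library would normally provide.

```python
import re

# Hypothetical, tiny stopword list; real libraries ship much longer ones.
STOPWORDS = {"the", "to", "with"}

# Toy lookup table standing in for a real stemmer (assumption for illustration).
STEMS = {"refused": "refuse", "dogs": "dog"}


def preprocess(text):
    """Lowercase, strip non-letters, drop stopwords, and stem each word."""
    text = text.lower()
    # Replace anything that is not a lowercase letter or whitespace with a space.
    text = re.sub(r"[^a-z\s]", " ", text)
    tokens = text.split()
    tokens = [token for token in tokens if token not in STOPWORDS]
    # Fall back to the word itself when the toy table has no entry for it.
    return [STEMS.get(token, token) for token in tokens]


cleaned = preprocess("The dog refused to share the bone with the other dogs.")
print(" ".join(cleaned))  # dog refuse share bone other dog
```

Keep in mind that real stemmers often produce truncated stems that are not dictionary words, so the output of a library stemmer may differ slightly from the tidy result shown here.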

Enumerating Word Counts

The main idea behind bag of words models revolves around the concept of unique word enumeration. In simpler terms, a bag of words model is an array with one column for each unique word in the original input texts. If the above sample text were the only text present in the input, for example, our bag of words model would have five columns, as there are five unique words.

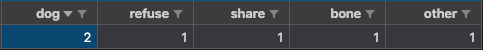

Each row holds, for each column, the number of times that column’s word appears in the corresponding text. To conceptualize this better, let’s take a look at the array below. It is a simple bag of words model built from the sample text preprocessed in the previous section.

As we can see, the string of data has been replaced by a row containing five elements. Each element shows how many times the corresponding word was found in the input text. For example, since dog appears twice, the number 2 is entered under the dog column.
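As a minimal sketch of this counting step, we can use Python's built-in `Counter` on the preprocessed sample text. The variable names here are my own choices for illustration, and the columns are listed in order of first appearance, which is only one possible ordering.

```python
from collections import Counter

tokens = "dog refuse share bone other dog".split()

# Count how many times each word occurs in the text.
counts = Counter(tokens)

# One column per unique word, in order of first appearance.
vocabulary = list(dict.fromkeys(tokens))

# The single row of our bag of words model.
row = [counts[word] for word in vocabulary]

print(vocabulary)  # ['dog', 'refuse', 'share', 'bone', 'other']
print(row)         # [2, 1, 1, 1, 1]
```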

Now that we have a general idea of how bag of words models work, let’s take a look at a slightly larger example with several rows of data. To try and mimic a real-life machine learning dataset, we will include a label column as well.

Bag of Words Real-Life Application Example

Let’s pretend that we were given the task of creating a classifier to categorize textual reviews into either of two categories:

Happy reviews (0)

Angry reviews (1)

To train our algorithm, we are given the dataset below:

Sample dataset

Let’s put this dataset through the process of text cleaning. This will result in a dataset containing only the cleaned text on each line. The majority of bag of words algorithms expect such corpora (the plural of corpus).

To review, let’s go through the steps of text cleaning in order:

Remove superfluous characters such as numbers and punctuation.

Convert each letter into its lowercase form.

Replace each word with its stem form.

If we were to implement all these steps in code, we would have a corpus similar to the following:

Corpus containing cleaned text

Now we have a corpus containing text in a good format for creating a bag of words model. First, we must create a column for each unique word in the corpus. It’s important to remember that there should not be any duplicate columns.

Here is the list of unique words in the dataset above: restaurant, totally, amazing, love, service, i, will, never, go, again, rude, employees, very, memorable, eat, here

The columns of our bag of words model are created, so now we must count the number of instances of each word for each row in the corpus. After counting and entering these values, we should produce an array like the one below.

As you can see, we have an array containing only numeric values (ignoring the headers) that can be passed to a machine learning classification algorithm. To train a supervised-learning algorithm, of course, we must also pass in the labels, which are usually supplied as a separate parameter alongside the bag of words model.
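To make the whole process concrete, here is a minimal sketch that builds a bag of words array and a matching list of labels. The corpus and labels below are hypothetical examples I made up for illustration; they are not the dataset from this article.

```python
# Hypothetical cleaned corpus and labels (0 = happy, 1 = angry).
# Illustrative only; not the article's actual dataset.
corpus = [
    "love service",
    "rude employees",
    "never go again",
    "love restaurant love service",
]
labels = [0, 1, 1, 0]

# One column per unique word, in order of first appearance (no duplicates).
vocabulary = []
for document in corpus:
    for word in document.split():
        if word not in vocabulary:
            vocabulary.append(word)

# One row per document; each entry counts how often the column's word occurs.
X = [[document.split().count(word) for word in vocabulary] for document in corpus]

print(vocabulary)
for row, label in zip(X, labels):
    print(row, label)
```

Here `X` plays the role of the bag of words model and `labels` is the separate parameter holding the classes. In practice, libraries such as scikit-learn provide this counting step as `CountVectorizer`, which returns the result as a sparse matrix.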

After fully understanding bag of words models, you may be amazed by their simplicity. Though this is a key advantage of such models, there are disadvantages that must also be taken into consideration. The next section will provide an overview of these disadvantages and alternatives that can be used to overcome them.

The Disadvantages of and Alternatives to Bag of Words

As you may have guessed, bag of words models can become extremely large when used for datasets containing thousands of input texts, since every new unique word adds another column. This can result in extremely long training times that require large amounts of processing power.

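One common refinement is Term Frequency-Inverse Document Frequency (TF-IDF), which reweights the counts so that words appearing in most texts carry less weight. Note that TF-IDF still keeps one column per word, so it addresses how informative each column is rather than how many columns there are. Though I won’t further discuss the algorithm in this article, I encourage you to check out the article created by Analytics Vidhya.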

Another downside to bag of words models is that they lose information concerning word order and semantics, which play a large role in more advanced classification problems. To capture word meaning, the Word2Vec model was created. On a high level, Word2Vec is a neural network that “learn[s] word associations from a large corpus of text”, according to Wikipedia. Word embeddings of this kind are widely used in natural language processing projects.
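To see how word order is lost, consider this small sketch. The two sentences are hypothetical examples of my own; they mean very different things, yet they produce identical word counts.

```python
from collections import Counter

# Two sentences with the same words in a different order.
first = Counter("dog bites man".split())
second = Counter("man bites dog".split())

# A bag of words model cannot tell them apart.
same_bag = first == second
print(same_bag)  # True
```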

Even with these disadvantages, though, bag of words is still a useful text processing method in that it offers a simple solution to converting inputs from textual to numeric.

For a more advanced example of bag of words in practical use, please check out my article on Real or Fake Disasters.

Thanks for reading!